CompoSE: Compositional Synthesis and Editing of 3D Shapes via Part-Aware Control

A novel method for compositional synthesis and editing of 3D shapes with part-aware control via coarse geometric primitives.

Abstract

Creating and editing high-quality 3D content remains a central challenge in computer graphics. We address this challenge by introducing CompoSE, a novel method for Compositional Synthesis and Editing of 3D shapes via part-aware control. Our method takes as input a set of coarse geometric primitives (e.g., bounding boxes) that represent distinct object parts arranged in a particular spatial configuration, and synthesizes as output part-separated 3D objects that support localized granular (i.e., compositional) editing of individual parts.
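To make the input concrete, here is a minimal sketch of what a coarse part layout could look like, assuming each primitive is an axis-aligned bounding box. The names `BoxPrimitive`, `bounds`, and `layout`, and the chair dimensions, are hypothetical and chosen for illustration; they are not taken from the CompoSE implementation.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class BoxPrimitive:
    """One coarse part control: an axis-aligned box (hypothetical representation)."""
    center: Vec3
    size: Vec3  # full extent along x, y, z

    def bounds(self) -> Tuple[Vec3, Vec3]:
        """Return the (min corner, max corner) of the box."""
        lo = tuple(c - s / 2 for c, s in zip(self.center, self.size))
        hi = tuple(c + s / 2 for c, s in zip(self.center, self.size))
        return lo, hi


# Illustrative chair-like layout: a seat, a back, and four legs.
# No per-part label or per-part prompt is attached to any box.
seat = BoxPrimitive(center=(0.0, 0.5, 0.0), size=(1.0, 0.25, 1.0))
back = BoxPrimitive(center=(0.0, 1.0, -0.4375), size=(1.0, 0.75, 0.125))
legs = [
    BoxPrimitive(center=(x, 0.1875, z), size=(0.125, 0.375, 0.125))
    for x in (-0.375, 0.375)
    for z in (-0.375, 0.375)
]
layout: List[BoxPrimitive] = [seat, back, *legs]
```

The four leg boxes are mirror images of one another, which is the kind of symmetry the abstract says the method infers from the layout alone.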

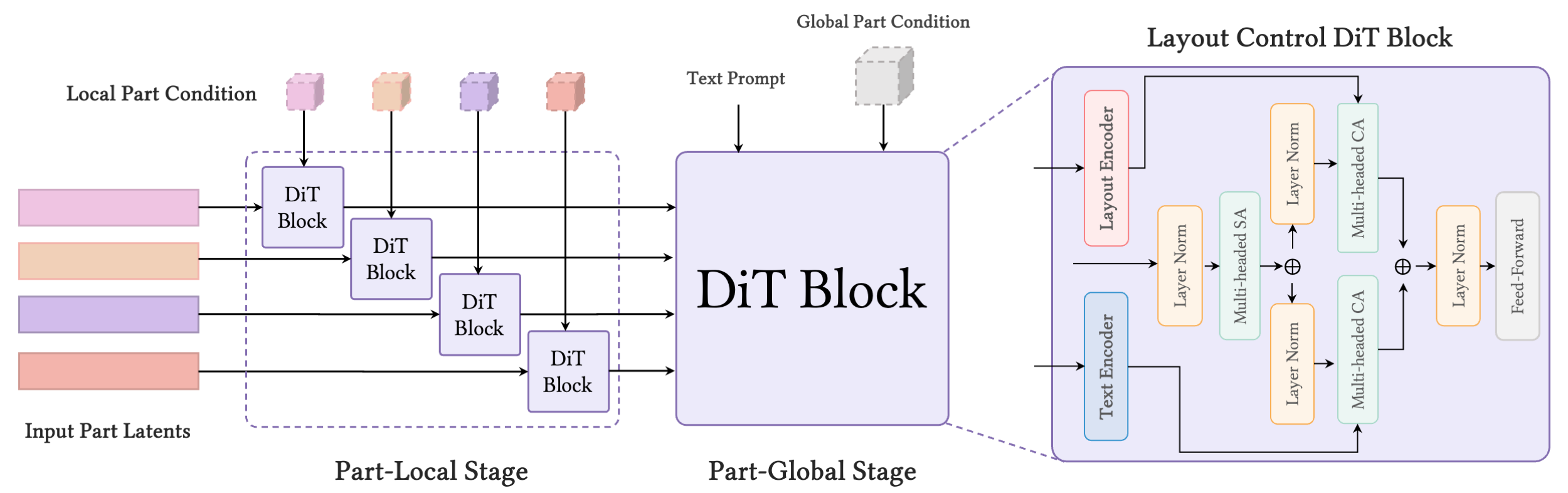

The key insight that enables our method is our use of a diffusion transformer architecture that alternates between processing each part locally and aggregating contextual information across parts globally, and features a novel conditioning technique that ensures strong adherence to the user's input. Importantly, our method learns to infer part semantics and symmetries directly from coarse layout guidance, and does not require part-level text prompts.

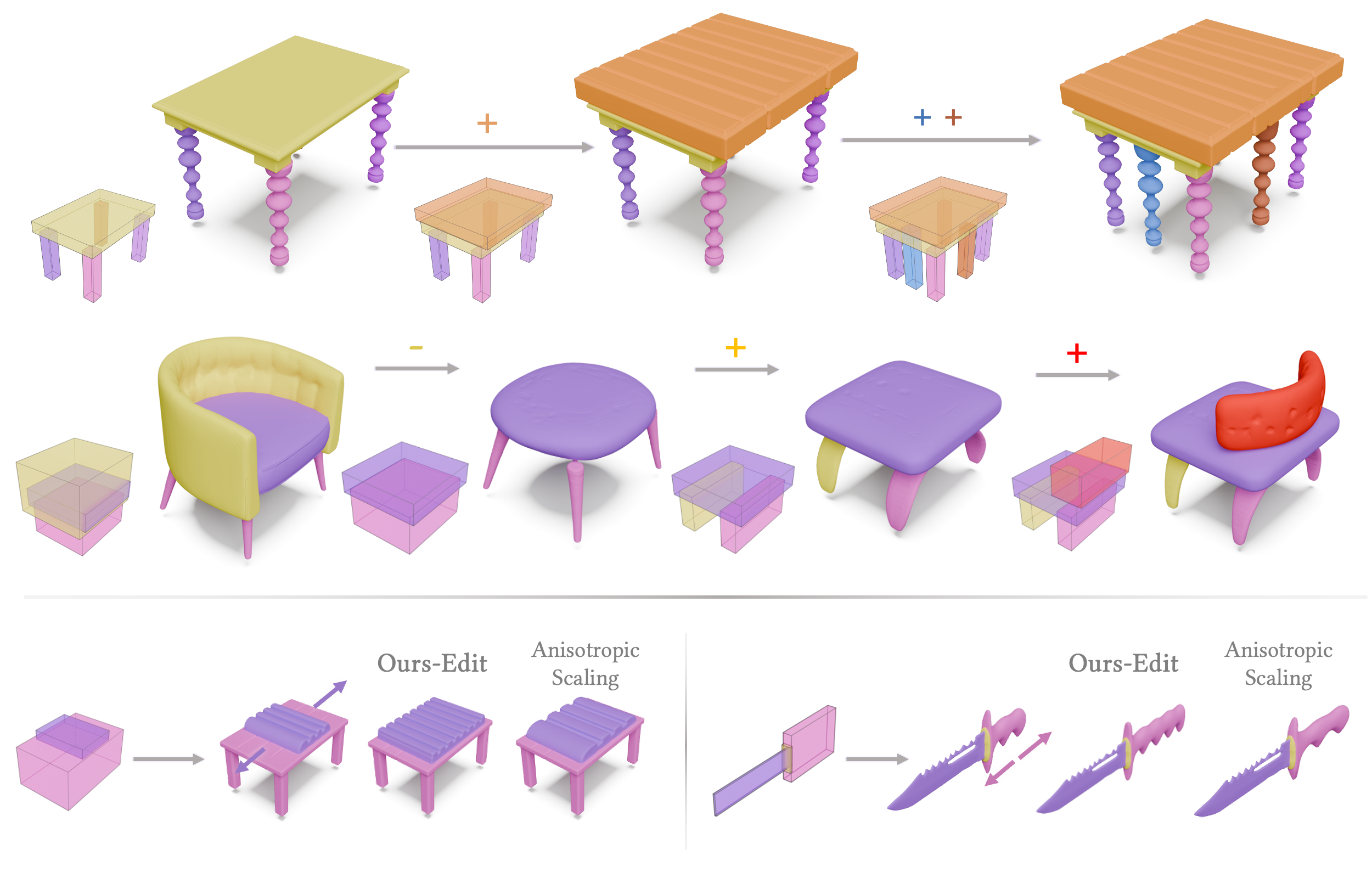

We demonstrate that our method enables powerful compositional editing capabilities, including context-aware substitution, addition, deletion, and style-preserving resizing operations. We show through extensive experiments that our method significantly outperforms existing approaches on a guided synthesis task, as measured by objective metrics and human evaluations.

Demo

CompoSE enables intuitive compositional control over 3D shape synthesis and editing through coarse geometric primitives. The videos below demonstrate key capabilities of our method across different editing operations. Generation is sped up for visualization purposes.

We feature context-aware addition of new parts that harmonize with existing geometry. In this example, the added legs respect the style of previously existing legs.

We show generation and addition of new parts that respect existing part semantics and symmetries.

We feature generation and part substitutions following new part layouts and prompts.

Framework

Our diffusion transformer (DiT) architecture for compositional synthesis and editing with part-aware control. Our architecture alternates between local and global stages. Local stages process individual geometric primitives provided by the user, and global stages aggregate context cues and text information across primitives.
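The alternation between local and global stages can be illustrated with a toy sketch. It assumes plain single-head self-attention with identity projections and no learned weights, and it omits the diffusion timestep and the conditioning technique entirely. The function names (`self_attention`, `local_stage`, `global_stage`, `alternate`) are hypothetical, and this is not the CompoSE architecture itself, only the token-routing pattern the caption describes.

```python
import math
from typing import List

Token = List[float]


def self_attention(tokens: List[Token]) -> List[Token]:
    """Toy single-head self-attention (identity projections) with a residual."""
    dim = len(tokens[0])
    out = []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        peak = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        mixed = [
            sum(w / total * value[j] for w, value in zip(weights, tokens))
            for j in range(dim)
        ]
        out.append([x + m for x, m in zip(query, mixed)])  # residual connection
    return out


def local_stage(parts: List[List[Token]]) -> List[List[Token]]:
    """Attend within each part independently; nothing crosses part boundaries."""
    return [self_attention(part) for part in parts]


def global_stage(parts: List[List[Token]], text: List[Token]) -> List[List[Token]]:
    """Attend over all part tokens plus text tokens, then split back per part."""
    flat = [tok for part in parts for tok in part] + text
    mixed = self_attention(flat)
    out, start = [], 0
    for part in parts:
        out.append(mixed[start:start + len(part)])
        start += len(part)
    return out  # updated text tokens are discarded in this sketch


def alternate(parts: List[List[Token]], text: List[Token], rounds: int = 2):
    """Run `rounds` repetitions of a local stage followed by a global stage."""
    for _ in range(rounds):
        parts = local_stage(parts)
        parts = global_stage(parts, text)
    return parts


# Two parts with 2 and 3 tokens, plus one text token (all values illustrative).
parts = [[[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5], [1.0, 1.0], [0.0, 0.0]]]
text = [[0.1, 0.2]]
result = alternate(parts, text)
```

In this sketch a local stage leaves one part's tokens unaffected by any change to another part, while a global stage lets every part see all other parts and the text. Each part keeps its own token sequence throughout, which is what makes a part-separated output possible.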

Part-Level Editing

We demonstrate our method's versatility through diverse part-level editing operations. In the first two rows, we showcase edit sequences starting from a base shape. We iteratively add parts by creating new part controls, delete parts, or substitute parts by changing the text prompt or by rescaling without style preservation. In the last row, we showcase two examples of style-preserving rescaling operations.

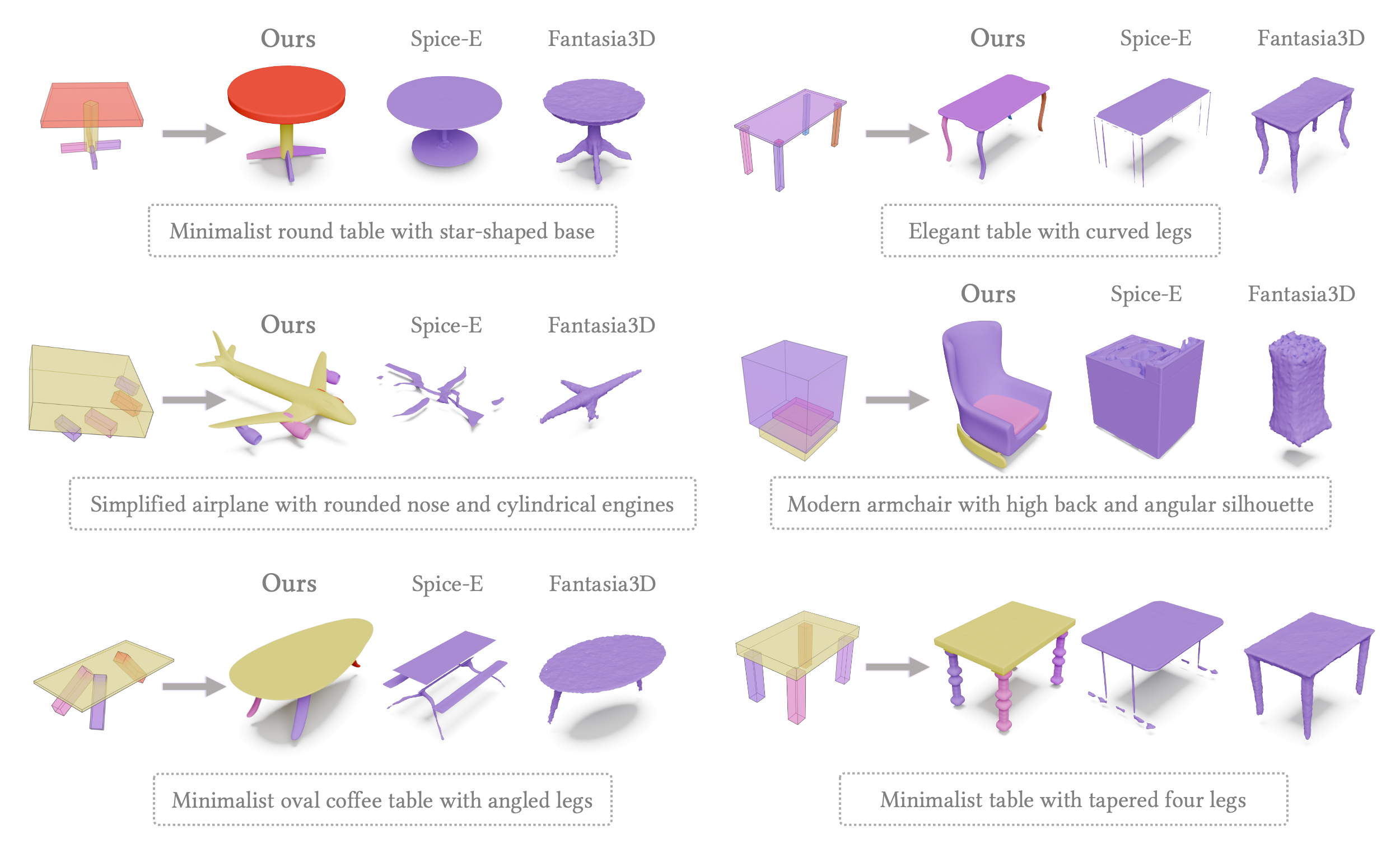
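The editing operations shown in the figure can be thought of as small, composable changes to the set of part controls and the text prompt. The following is a minimal sketch under that assumption. `PartControl`, `EditState`, the `keep_style` flag, and the example prompts are all hypothetical names and values used for illustration, and the sketch only tracks the user-side controls, not the synthesis step that follows each edit.

```python
from dataclasses import dataclass, replace
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class PartControl:
    """A coarse box for one part (hypothetical representation)."""
    center: Vec3
    size: Vec3
    keep_style: bool = False  # hypothetical flag: request style-preserving resizing


@dataclass(frozen=True)
class EditState:
    """The user-side controls at one step of an edit sequence."""
    prompt: str
    parts: Tuple[PartControl, ...]


def add_part(state: EditState, control: PartControl) -> EditState:
    """Addition: create a new part control."""
    return replace(state, parts=state.parts + (control,))


def delete_part(state: EditState, index: int) -> EditState:
    """Deletion: drop the control at `index`."""
    return replace(state, parts=state.parts[:index] + state.parts[index + 1:])


def resize_part(state: EditState, index: int, size: Vec3, keep_style: bool) -> EditState:
    """Resizing: with keep_style=False this acts as a substitution of the part."""
    edited = replace(state.parts[index], size=size, keep_style=keep_style)
    return replace(state, parts=state.parts[:index] + (edited,) + state.parts[index + 1:])


def change_prompt(state: EditState, prompt: str) -> EditState:
    """Substitution via the text prompt; the layout is left untouched."""
    return replace(state, prompt=prompt)


# An illustrative edit sequence starting from a two-part base shape.
seat = PartControl(center=(0.0, 0.5, 0.0), size=(1.0, 0.25, 1.0))
back = PartControl(center=(0.0, 1.0, -0.4375), size=(1.0, 0.75, 0.125))
leg = PartControl(center=(0.375, 0.1875, 0.375), size=(0.125, 0.375, 0.125))

base = EditState(prompt="a wooden chair", parts=(seat, back))
step1 = add_part(base, leg)
step2 = resize_part(step1, 1, (1.0, 1.0, 0.125), keep_style=True)
step3 = delete_part(step2, 2)
step4 = change_prompt(step3, "a metal chair")
```

Because every operation returns a new immutable state, each step of the sequence remains available for comparison, much like the rows of the figure.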

Comparative Results

We compare our method to two guidance-based methods, Spice-E and Fantasia3D. Both baselines generate holistic shapes without part-level decomposition. We provide them with the same coarse guidance shape and text prompt as our method. In contrast, our method produces higher-quality geometry with part-level decomposition, without requiring part-level annotations.